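This issue's recommendation, PiFlow, ships with a rich set of processor components and provides a Shell, a DSL, a web configuration interface, task scheduling, and task monitoring.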

Project Features

- Easy to use

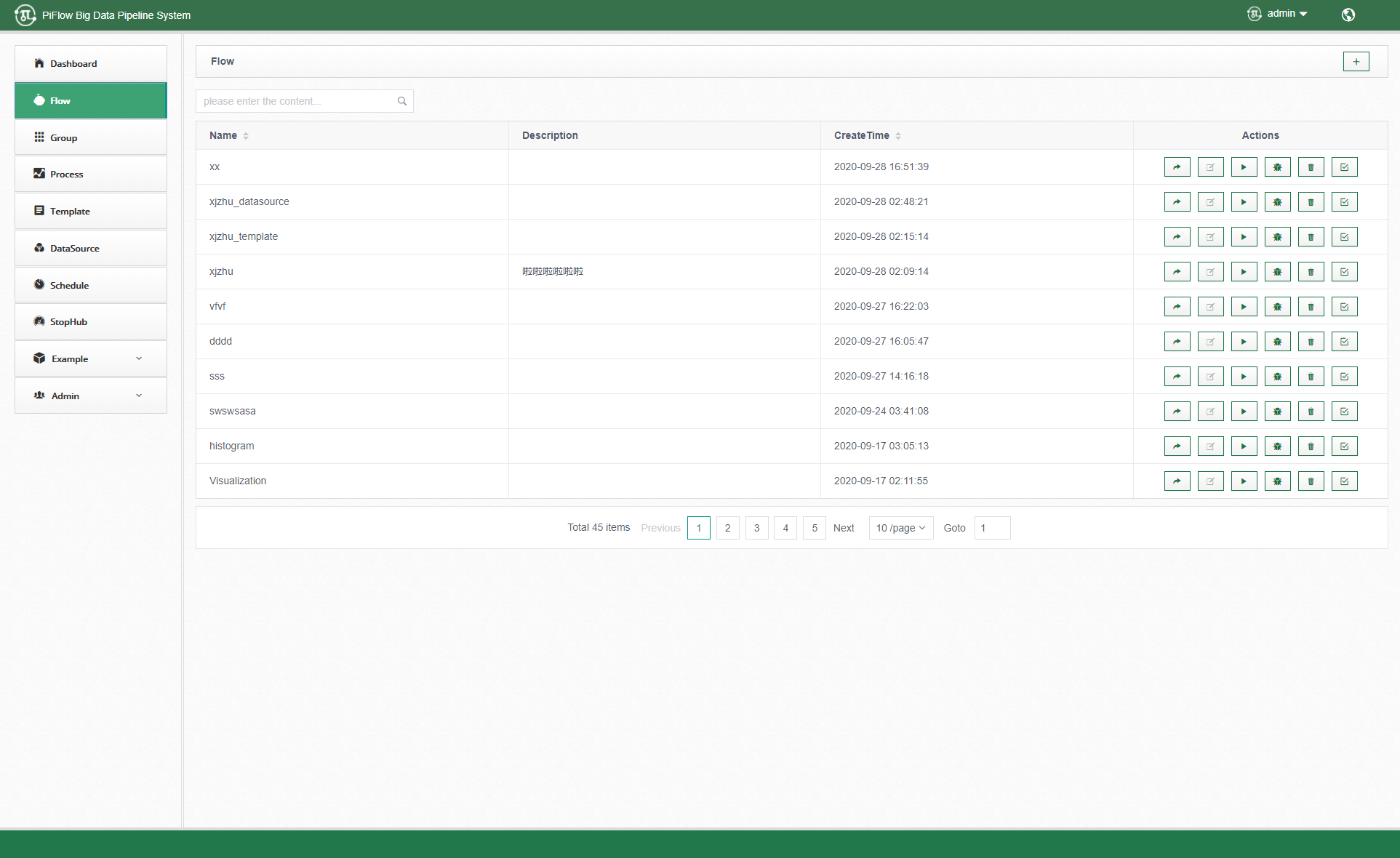

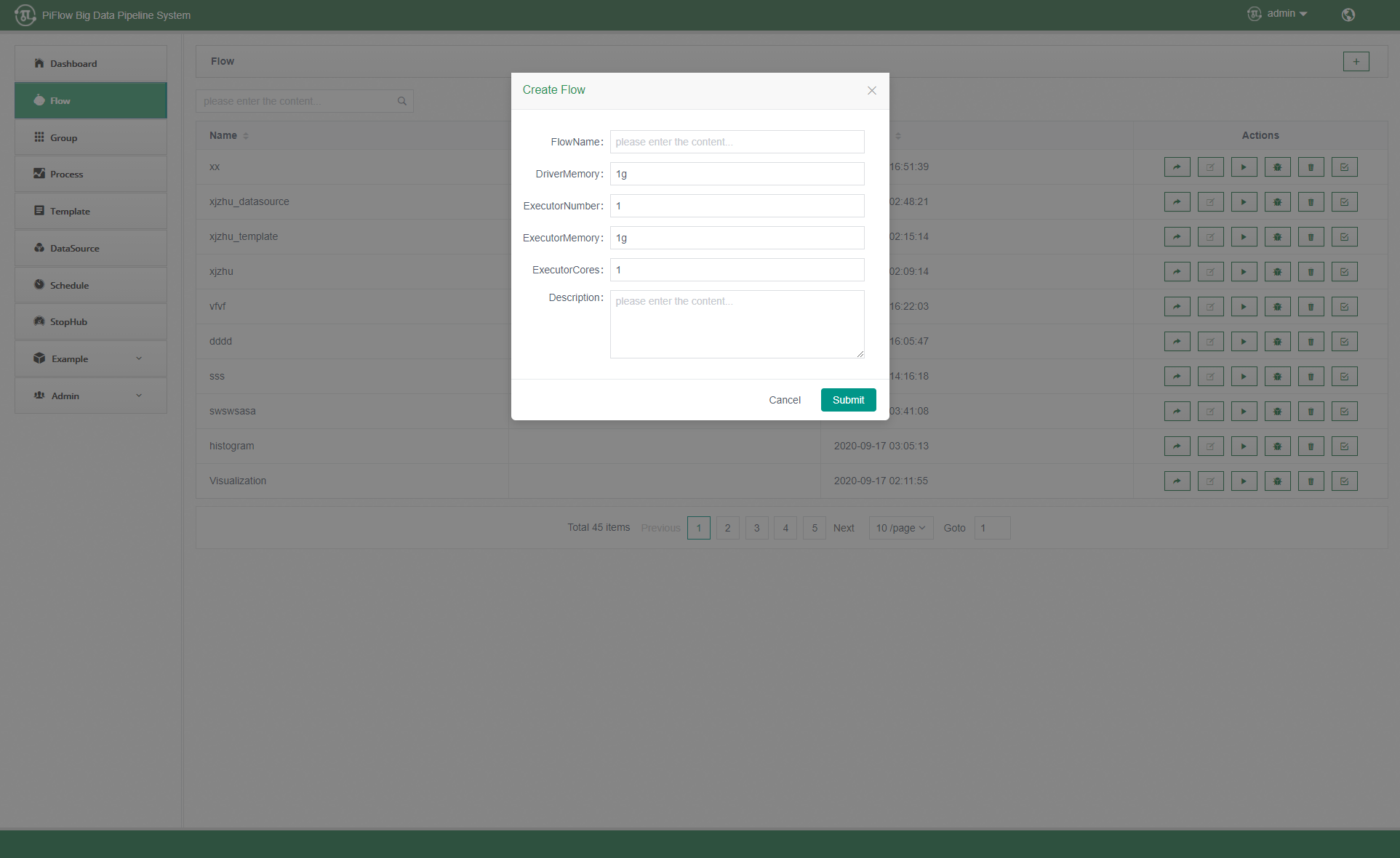

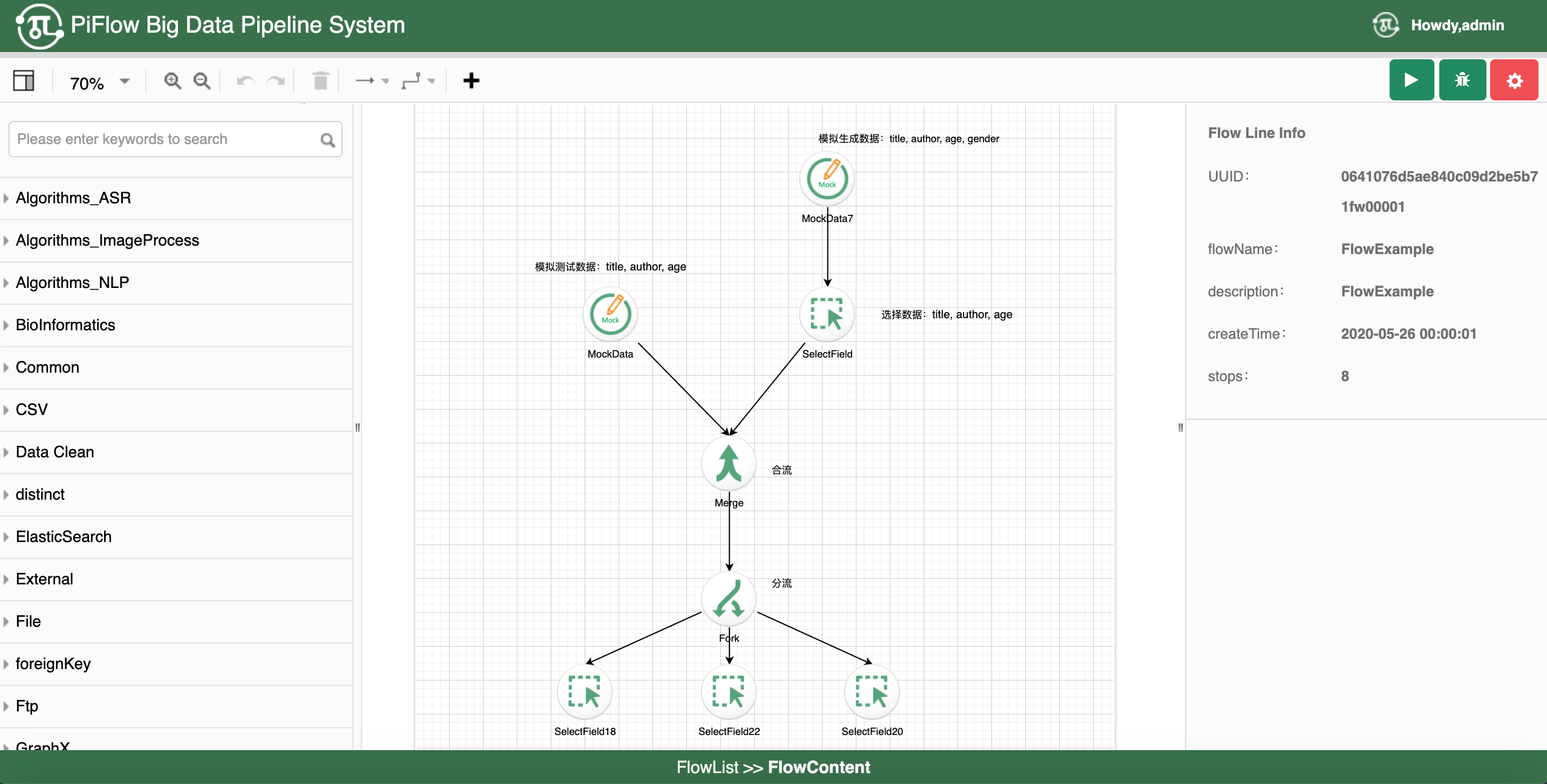

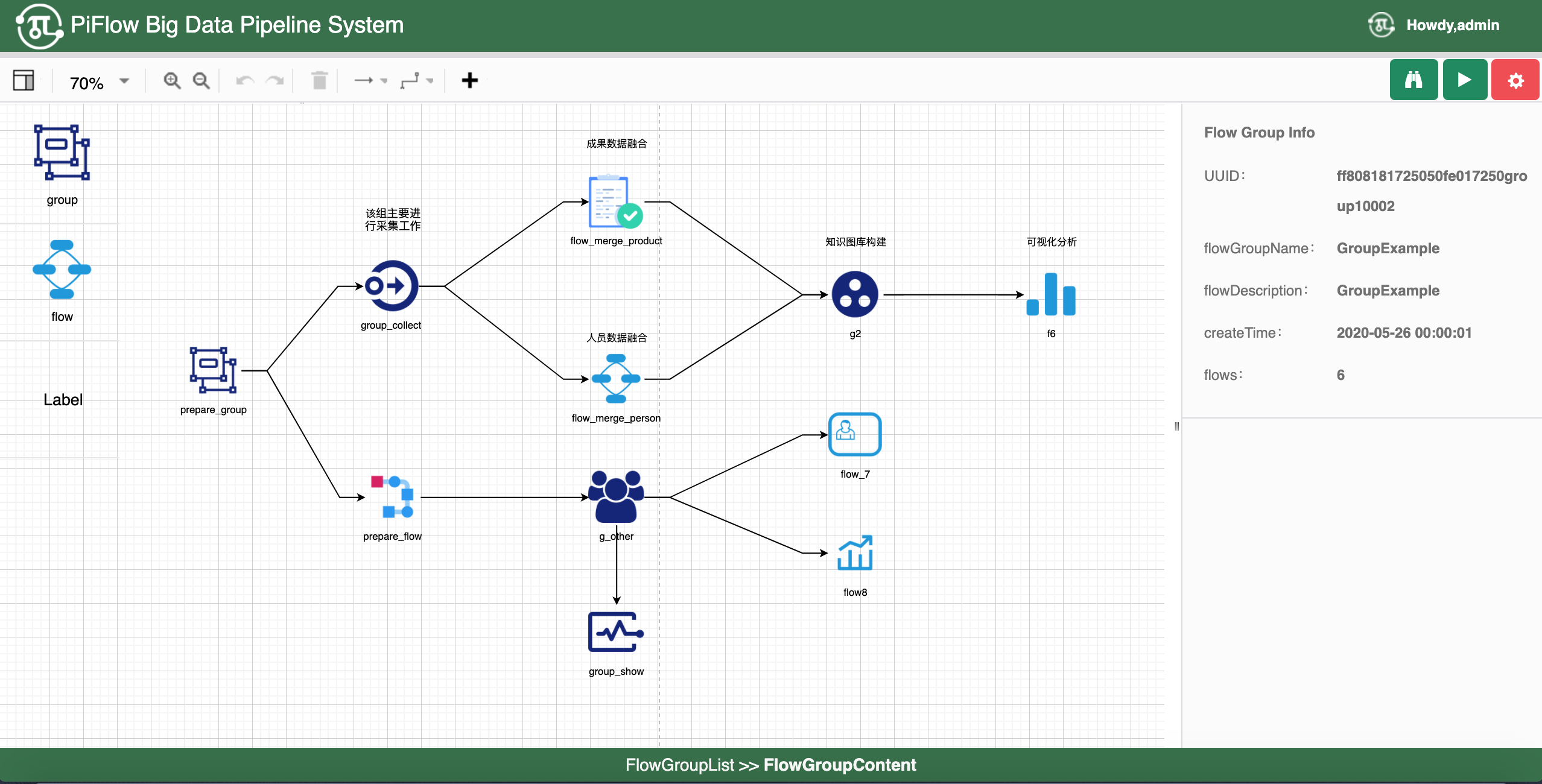

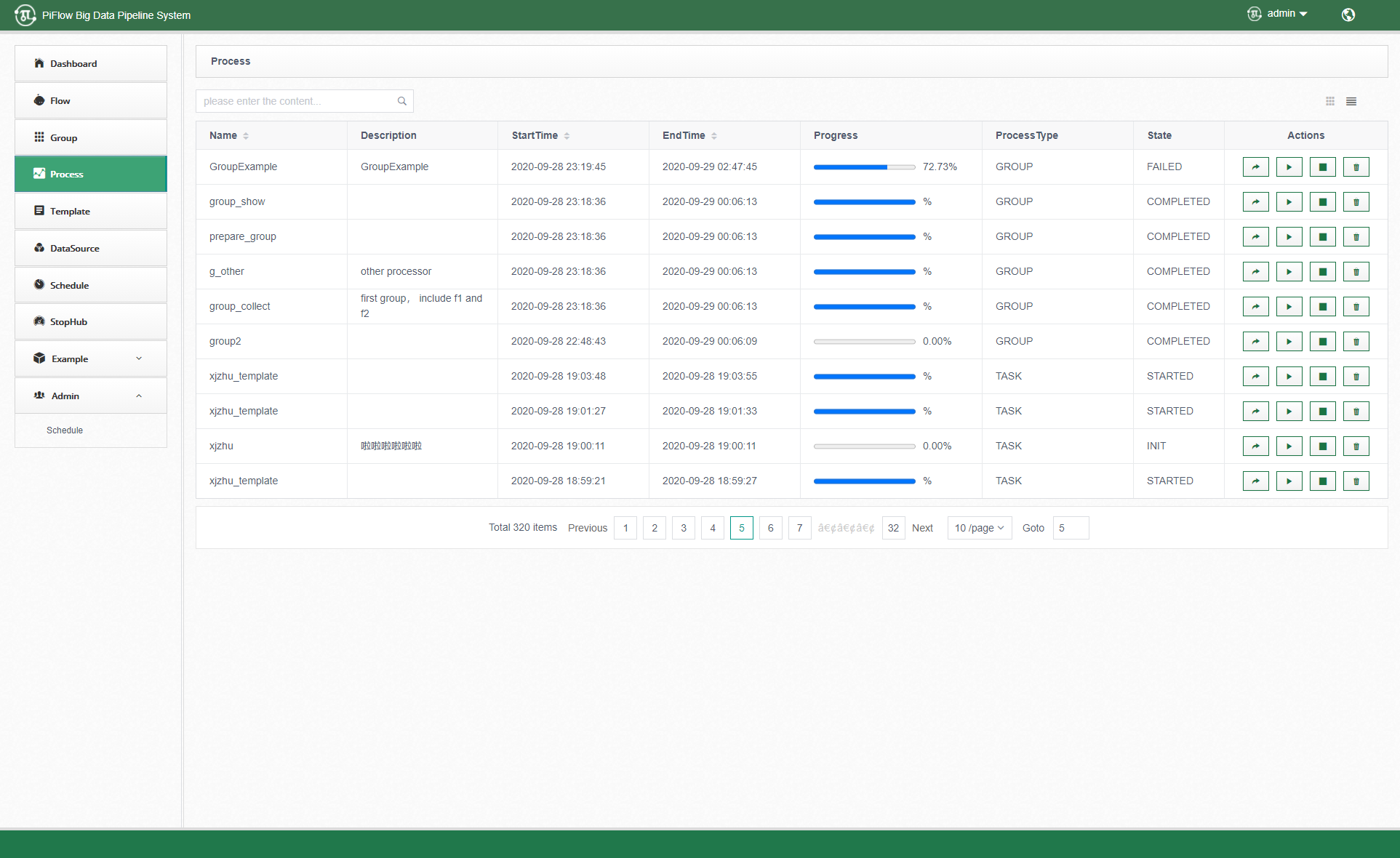

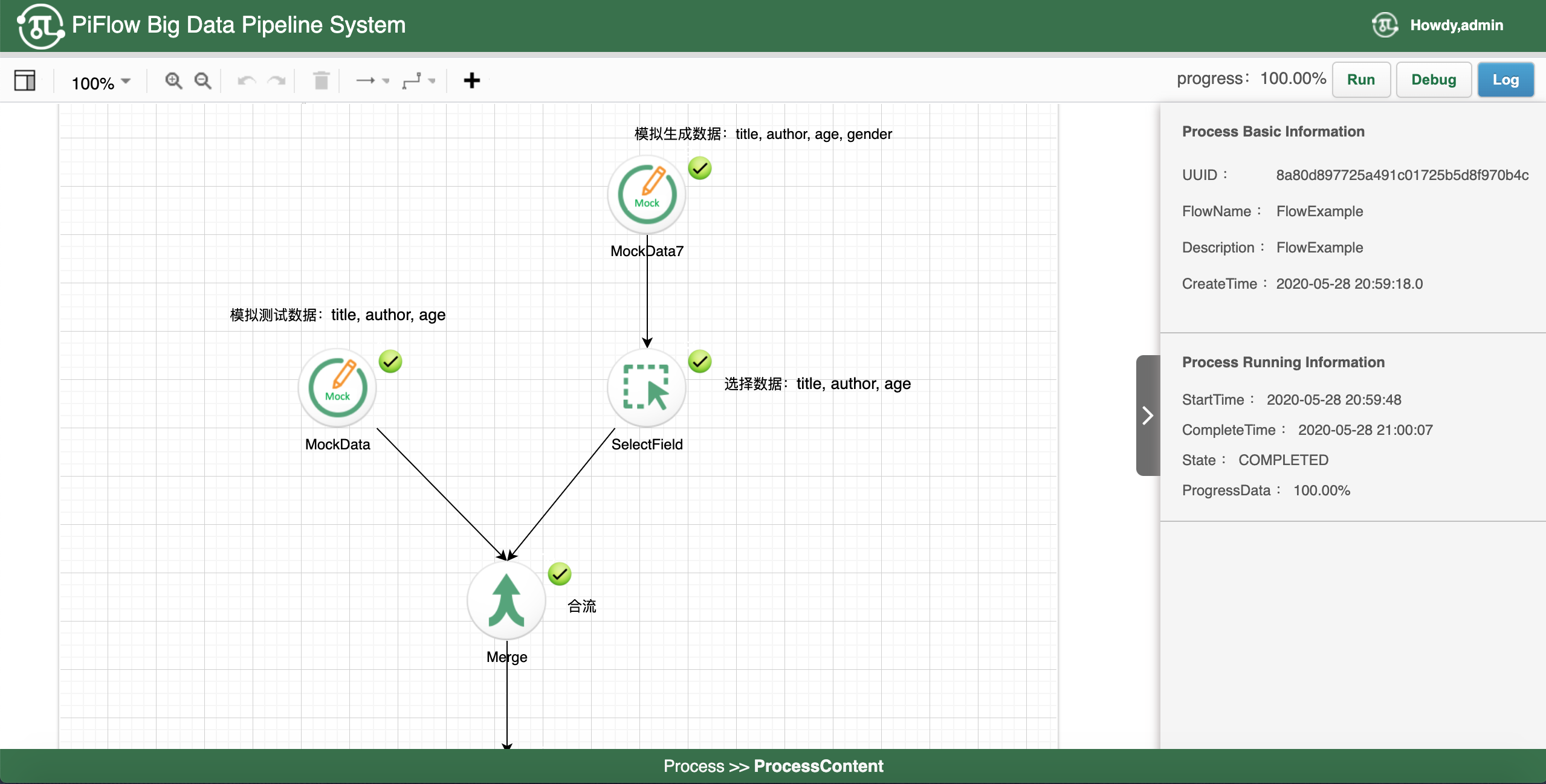

Visual pipeline configuration, pipeline monitoring, pipeline log viewing, checkpointing, and pipeline scheduling.

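- Highly extensible

Supports custom development of data processing components.

- High performance

Built on the distributed computing engine Spark.

- Powerful

Provides 100+ data processing components, including Hadoop, Spark, MLlib, Hive, Solr, Redis, MemCache, ElasticSearch, JDBC, MongoDB, HTTP, FTP, XML, CSV, and JSON. It also integrates algorithms from the microbiology field.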

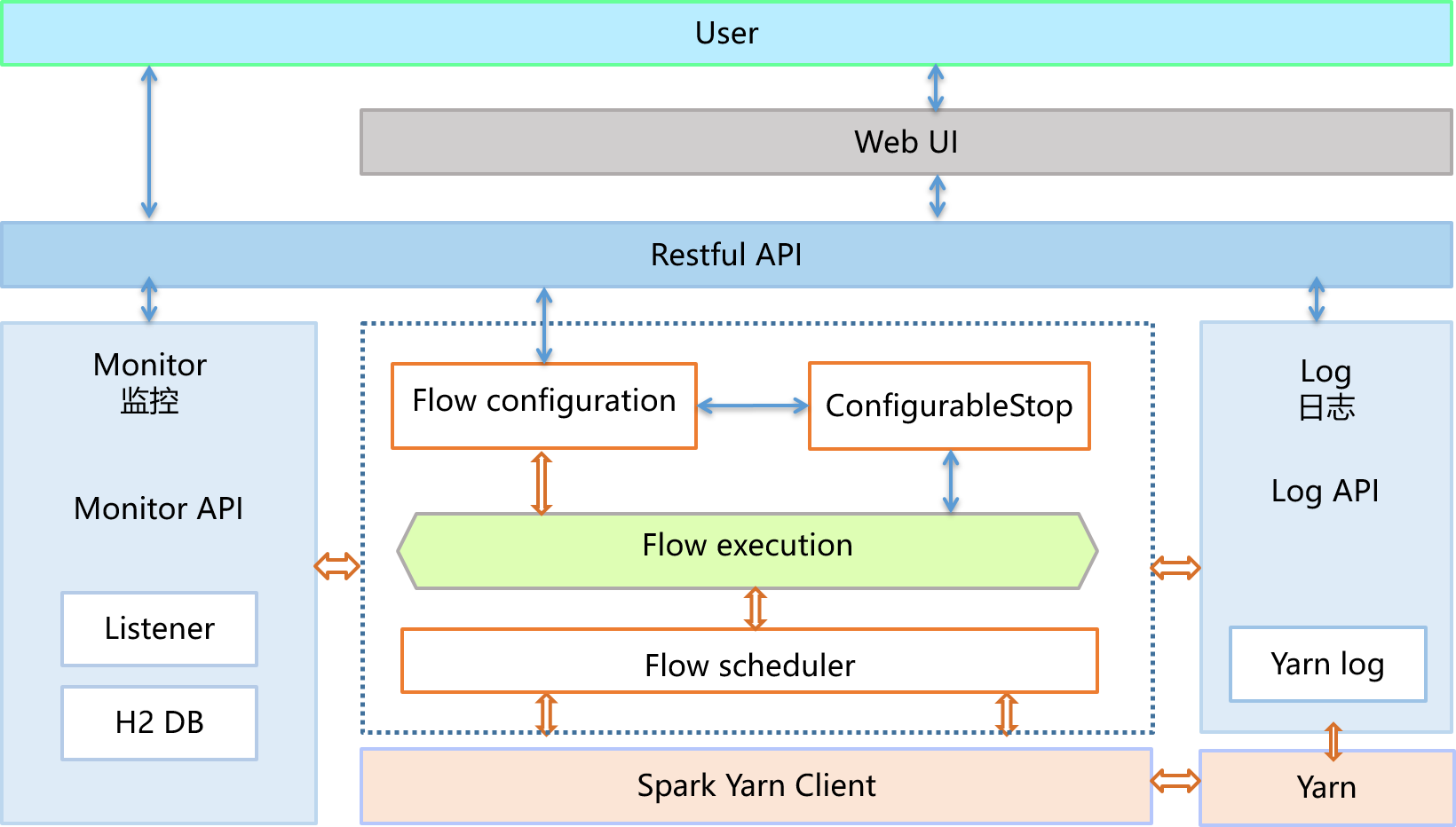

Architecture diagram

Environment

- JDK 1.8

- Scala-2.11.8

- Apache Maven 3.1.0

- Spark-2.1.0 or later

- Hadoop-2.6.0
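The list above gives a minimum version for Spark (2.1.0 or later). As a minimal sketch, a version comparison like the following could be used in a setup script to verify that minimum. The function names `version_ge` and `check_min_version` are hypothetical, the installed version is assumed to be supplied by the caller, and `sort -V` is assumed to be available (it is part of GNU coreutils).

```shell
#!/usr/bin/env bash
# Return success (0) when version $1 is greater than or equal to version $2.
# Assumes `sort -V` (version sort) is available, as in GNU coreutils.
version_ge() {
    local actual="$1" required="$2"
    # The smaller version sorts first; if that is the required one,
    # the actual version is at least as new.
    [ "$(printf '%s\n%s\n' "$required" "$actual" | sort -V | head -n 1)" = "$required" ]
}

# Print one status line for a component and its minimum version.
# Returns non-zero when the minimum is not met.
check_min_version() {
    local name="$1" actual="$2" required="$3"
    if version_ge "$actual" "$required"; then
        echo "OK   $name $actual (>= $required)"
    else
        echo "FAIL $name $actual (< $required)"
        return 1
    fi
}
```

For example, `check_min_version Spark 2.2.0 2.1.0` reports OK, while `check_min_version Spark 2.0.2 2.1.0` reports FAIL and returns a non-zero status.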

Getting Started

Build PiFlow:

Install external packages:

mvn install:install-file -Dfile=/../piflow/piflow-bundle/lib/spark-xml_2.11-0.4.2.jar -DgroupId=com.databricks -DartifactId=spark-xml_2.11 -Dversion=0.4.2 -Dpackaging=jar

mvn install:install-file -Dfile=/../piflow/piflow-bundle/lib/java_memcached-release_2.6.6.jar -DgroupId=com.memcached -DartifactId=java_memcached-release -Dversion=2.6.6 -Dpackaging=jar

mvn install:install-file -Dfile=/../piflow/piflow-bundle/lib/ojdbc6-11.2.0.3.jar -DgroupId=oracle -DartifactId=ojdbc6 -Dversion=11.2.0.3 -Dpackaging=jar

mvn install:install-file -Dfile=/../piflow/piflow-bundle/lib/edtftpj.jar -DgroupId=ftpClient -DartifactId=edtftp -Dversion=1.0.0 -Dpackaging=jar

mvn clean package -Dmaven.test.skip=true

[INFO] Replacing original artifact with shaded artifact.

[INFO] Reactor Summary:

[INFO]

[INFO] piflow-project ..................................... SUCCESS [ 4.369 s]

[INFO] piflow-core ........................................ SUCCESS [01:23 min]

[INFO] piflow-configure ................................... SUCCESS [ 12.418 s]

[INFO] piflow-bundle ...................................... SUCCESS [02:15 min]

[INFO] piflow-server ...................................... SUCCESS [02:05 min]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 06:01 min

[INFO] Finished at: 2020-05-21T15:22:58+08:00

[INFO] Final Memory: 118M/691M

[INFO] ------------------------------------------------------------------------

Run PiFlow Server:

Run PiFlow Server in IntelliJ:

- Download PiFlow: git clone https://github.com/cas-bigdatalab/piflow.git

- Import PiFlow into IntelliJ

- Edit the configuration file config.properties

Build the PiFlow jar:

Run --> Edit Configurations --> Add New Configuration --> Maven

Name: package

Command line: clean package -Dmaven.test.skip=true -X

run 'package' (the PiFlow jar file will be built in ../piflow/piflow-server/target/piflow-server-0.9.jar)

Run HttpService:

Edit Configurations --> Add New Configuration --> Application

Name: HttpService

Main class : cn.piflow.api.Main

Environment Variable: SPARK_HOME=/opt/spark-2.2.0-bin-hadoop2.6 (change the path to your Spark home)

run 'HttpService'

Test HttpService:

Run the sample pipeline: ../piflow/piflow-server/src/main/scala/cn/piflow/api/HTTPClientStartMockDataFlow.scala

You need to change the server IP and port in the API.

How to configure config.properties:

#spark and yarn config

spark.master=yarn

spark.deploy.mode=cluster

#hdfs default file system

fs.defaultFS=hdfs://10.0.86.191:9000

#yarn resourcemanager.hostname

yarn.resourcemanager.hostname=10.0.86.191

#if you want to use hive, set hive metastore uris

#hive.metastore.uris=thrift://10.0.88.71:9083

#show data in log, set 0 if you do not want to show data in logs

data.show=10

#server port

server.port=8002

#h2db port

h2.port=50002

To run PiFlow Web, go to the following link. The PiFlow Server and PiFlow Web versions must match:

https://github.com/cas-bigdatalab/piflow-web/releases/tag/v1.0
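Scripts that need values from the config.properties listing above (for example `server.port`, when pointing a client at the server) could read them with a small helper. The following is only a sketch: `get_property` is a hypothetical name, and it assumes plain `key=value` lines with no spaces around the `=`, as in the listing.

```shell
#!/usr/bin/env bash
# Print the value of key $2 from the properties file $1.
# Comment lines (starting with '#') and blank lines are skipped.
# Returns non-zero if the key is absent or only appears commented out.
get_property() {
    local file="$1" key="$2"
    awk -F= -v key="$key" '
        /^[[:space:]]*#/ || /^[[:space:]]*$/ { next }
        # $1 is the text before the first "="; strip "key=" and print the rest.
        $1 == key { sub(/^[^=]*=/, ""); print; found = 1; exit }
        END { exit found ? 0 : 1 }
    ' "$file"
}
```

With the listing above saved as config.properties, `get_property config.properties server.port` prints `8002`. A lookup for `hive.metastore.uris` fails, because that line is commented out.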

Docker image

- Pull the Docker image

docker pull registry.cn-hangzhou.aliyuncs.com/cnic_piflow/piflow:v1.1

- View the Docker image information

docker images

- Run a container using the image ID; all PiFlow services start automatically. Be sure to set HOST_IP

docker run -h master -itd --env HOST_IP=*.*.*.* --name piflow-v1.1 -p 6001:6001 -p 6002:6002 [imageID]

- Visit "HOST_IP:6001". Startup can be slow, so you may need to wait a few minutes

- If something goes wrong, all the applications are in the /opt folder
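Because the container depends on HOST_IP being set correctly, it can help to validate the address before starting it. The sketch below only prints the `docker run` command from the steps above so that it can be reviewed first; it does not run it. The helper names `is_ipv4` and `piflow_run_cmd` are hypothetical, and the check assumes HOST_IP is a dotted-quad IPv4 address.

```shell
#!/usr/bin/env bash
# Succeed only if $1 looks like a dotted-quad IPv4 address (four 0-255 fields).
is_ipv4() {
    local ip="$1" part old_ifs
    case "$ip" in
        # Reject anything other than digits and dots, and empty fields.
        *[!0-9.]* | .* | *. | *..*) return 1 ;;
    esac
    old_ifs="$IFS"
    IFS=.
    set -- $ip            # split the address on dots into $1..$n
    IFS="$old_ifs"
    [ "$#" -eq 4 ] || return 1
    for part in "$@"; do
        [ "${#part}" -le 3 ] && [ "$part" -le 255 ] || return 1
    done
}

# Print (not run) the docker run command for a host IP and image ID.
piflow_run_cmd() {
    local host_ip="$1" image_id="$2"
    is_ipv4 "$host_ip" || { echo "invalid HOST_IP: $host_ip" >&2; return 1; }
    echo "docker run -h master -itd --env HOST_IP=$host_ip --name piflow-v1.1 -p 6001:6001 -p 6002:6002 $image_id"
}
```

Calling `piflow_run_cmd` with the unfilled placeholder `*.*.*.*` fails with an error message. With a real address and the image ID reported by `docker images`, it prints the full command.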